Diversity also matters in AI and Machine Learning

- Marie Tseng

- Jan 28, 2019

Updated: Apr 17, 2023

“People expected AI to be unbiased; that’s just wrong,” Joanna Bryson says. “If the underlying data reflects stereotypes, or if you train AI from human culture, you will find these things.” *

Over the winter holidays I had interesting conversations with my children on this topic. Both study and work in computer science and artificial intelligence. So many questions surround this field when it comes to ethics and its implications for our society that it was enlightening to hear their views.

It seems that one of the many challenges this industry faces will be addressing the issue of bias.

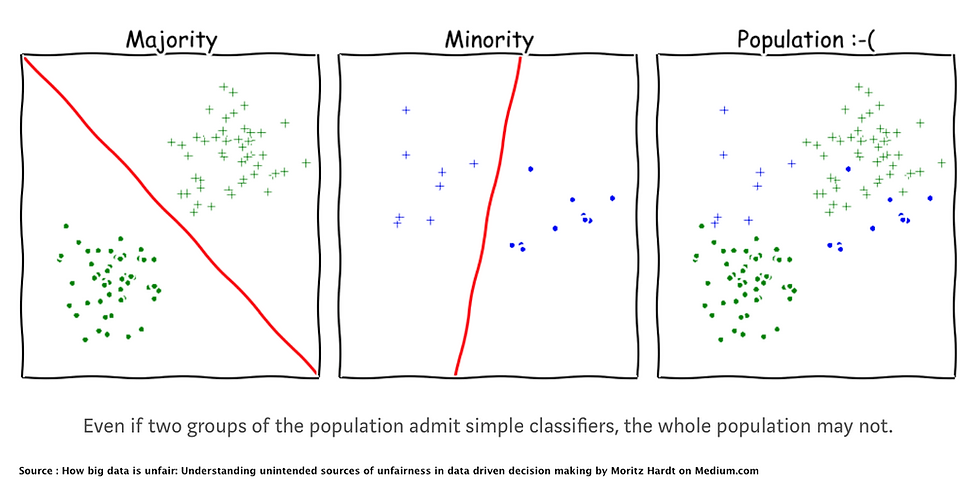

Machines learn from the data they are fed. They look for patterns in that data in order to make diagnoses and/or predict future behaviours. Those patterns emerge from the most common behaviours (those of the majority of the data), and this has two serious implications:

- How do we ensure that the data collected is not biased or discriminatory?
- If the data reflects the behaviours of the majority, how will the predictions and decisions affect minorities (and I am not just talking about ethnicity or gender here)? Is AI able to make sense of minority data?
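To make the second point concrete, here is a deliberately simplified toy sketch in Python, not taken from any real system. The helper names `train_majority_classifier` and `accuracy` and the dataset are hypothetical: the "model" simply predicts the most common label it saw during training. On a made-up dataset where 95 of 100 examples share the majority outcome, it scores 95% overall while getting every minority example wrong. Real machine learning models are far more sophisticated, but the same pull towards the majority pattern can appear in subtler forms.

```python
from collections import Counter


def train_majority_classifier(labels):
    """Hypothetical toy 'model': always predicts the most common training label."""
    most_common_label, _ = Counter(labels).most_common(1)[0]
    return lambda example: most_common_label


def accuracy(model, examples, labels):
    """Fraction of examples where the model's prediction matches the true label."""
    correct = sum(model(x) == y for x, y in zip(examples, labels))
    return correct / len(labels)


# Hypothetical dataset: 95 examples with the majority outcome "A"
# and only 5 with the minority outcome "B".
examples = list(range(100))
labels = ["A"] * 95 + ["B"] * 5

model = train_majority_classifier(labels)

# Overall accuracy looks good because the majority dominates the data...
overall = accuracy(model, examples, labels)

# ...but restricting the evaluation to the minority cases tells another story.
minority_idx = [i for i, y in enumerate(labels) if y == "B"]
minority = accuracy(
    model,
    [examples[i] for i in minority_idx],
    [labels[i] for i in minority_idx],
)

print(f"overall accuracy:  {overall:.0%}")   # 95%
print(f"minority accuracy: {minority:.0%}")  # 0%
```

A single headline accuracy figure can therefore hide the fact that a system fails the very people who are least represented in its data.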

According to Sanjay Srivastava, one of the solutions is diversity:

“Diversity in the teams working with AI can also address training bias. When we only have a select few working on a system, it becomes skewed to the thinking of a small group of individuals. By bringing in a team with different skills and approaches, we can have a more holistic, ethical design and come up with new angles.”

AI and machine learning are becoming key tools for decision-making in many sectors, from health to agriculture, and some people are already looking at using them in criminal law.

It is therefore crucial that we understand clearly how bias and discrimination affect these new tools, and that we do our utmost to correct them. Building truly diverse and inclusive teams will help.

References for this article:
